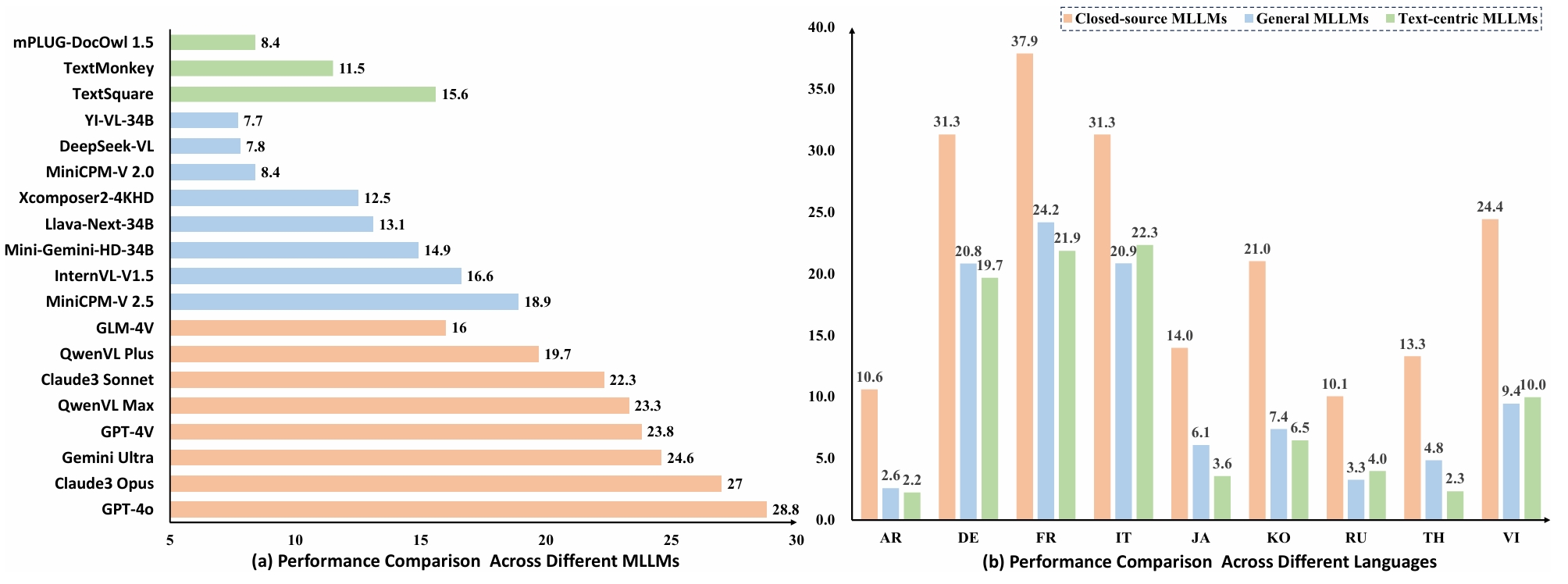

We evaluate a range of models, including LLMs and LMMs, and within each type we consider both closed- and open-source models. All baseline MLLMs are evaluated with their default settings; we do not study the effect of the generation configuration on the results. To make the MLLM outputs easier to evaluate, we use the following prompt format to limit the output length: "Answer the question using a word or phrase in the language of the question. + [Question]", where "[Question]" is the actual question from the MTVQA test set. This keeps the answers as concise as possible. In addition, InternLM-Xcomposer2-4KHD is chosen as the base model for an instruction-tuning experiment on the MTVQA training set.
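As an illustration of the prompt format described above, the following minimal sketch prepends the fixed instruction to a test question. The function name `build_prompt` and the choice of a single space as the separator are assumptions made for this example; the text only specifies the instruction string followed by the question.

```python
# Fixed instruction quoted from the prompt format in the text.
PROMPT_PREFIX = (
    "Answer the question using a word or phrase in the language of the question."
)


def build_prompt(question: str) -> str:
    """Prepend the length-limiting instruction to one test question.

    Assumption: the "+" in the prompt format is rendered as a single space.
    """
    return f"{PROMPT_PREFIX} {question.strip()}"


if __name__ == "__main__":
    # Hypothetical question, not taken from the MTVQA test set.
    print(build_prompt("Quel est le nom du magasin ?"))
```

The same prefix is used for every question regardless of its language, so the model is expected to infer the answer language from the question itself.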

| Model | Overall | AR | DE | FR | IT | JA | KO | RU | TH | VI |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| GPT-4O(mni) | 27.8 | 20.2 | 34.2 | 41.2 | 32.7 | 20.0 | 33.9 | 11.5 | 22.5 | 34.2 |
| Claude3 Opus | 25.7 | 15.1 | 33.4 | 40.6 | 34.4 | 19.4 | 27.2 | 13.0 | 19.5 | 29.1 |
| Gemini Ultra | 23.2 | 14.7 | 32.3 | 40.0 | 31.8 | 12.3 | 17.2 | 11.8 | 20.3 | 28.6 |
| GPT-4V(ision) | 22.0 | 11.5 | 1.5 | 40.4 | 32.3 | 11.5 | 16.7 | 10.3 | 15.0 | 28.9 |
| Claude3 Sonnet | 21.1 | 10.5 | 28.9 | 35.6 | 31.8 | 13.9 | 22.2 | 11.0 | 15.2 | 20.8 |
| QwenVL Max | 21.1 | 7.7 | 31.4 | 37.6 | 30.2 | 18.6 | 15.4 | 10.4 | 4.8 | 23.5 |
| Xcomposer-SFT | 19.7 | 11.8 | 31.7 | 37.4 | 29.3 | 14.5 | 12.9 | 5.8 | 13.9 | 20.2 |
| QwenVL Plus | 17.8 | 4.8 | 28.8 | 33.7 | 27.1 | 12.8 | 19.9 | 9.4 | 5.6 | 18.1 |
| MiniCPM-V 2.5 | 17.3 | 6.1 | 29.6 | 35.7 | 26.0 | 12.1 | 13.1 | 5.3 | 12.6 | 15.3 |
| InternVL-V1.5 | 14.9 | 3.4 | 27.1 | 31.4 | 27.1 | 9.9 | 9.0 | 4.9 | 8.7 | 12.4 |
| GLM-4V | 13.6 | 0.3 | 30.0 | 34.1 | 30.1 | 3.4 | 5.7 | 3.0 | 3.5 | 12.3 |
| TextSquare | 13.6 | 3.7 | 27.0 | 30.7 | 26.7 | 3.2 | 7.2 | 6.7 | 5.2 | 12.4 |
| Mini-Gemini-HD-34B | 13.0 | 2.2 | 25.0 | 29.2 | 25.5 | 6.1 | 8.6 | 4.1 | 4.3 | 11.8 |
| Xcomposer2-4KHD | 11.2 | 2.0 | 20.6 | 23.2 | 21.6 | 5.6 | 7.7 | 4.1 | 6.1 | 10.1 |
| Llava-Next-34B | 11.1 | 3.3 | 24.0 | 28.0 | 22.3 | 3.6 | 6.1 | 2.6 | 0.4 | 9.8 |
| TextMonkey | 9.9 | 2.0 | 18.1 | 19.9 | 22.1 | 4.6 | 7.2 | 3.2 | 0.9 | 11.1 |
| MiniCPM-V 2.0 | 7.4 | 1.3 | 12.7 | 14.9 | 17.0 | 3.7 | 5.6 | 2.2 | 2.2 | 6.8 |
| mPLUG-DocOwl 1.5 | 7.2 | 1.0 | 13.9 | 14.9 | 18.2 | 2.9 | 5.0 | 2.0 | 0.9 | 6.4 |
| YI-VL-34B | 6.8 | 1.7 | 13.5 | 15.7 | 12.1 | 4.8 | 5.2 | 0.8 | 3.5 | 4.1 |
| DeepSeek-VL | 6.6 | 0.6 | 14.2 | 15.3 | 15.2 | 2.9 | 3.8 | 1.6 | 0.9 | 5.2 |

Overall results of different models on the MTVQA test set. The best-performing model in each category is in bold. Performance is measured using accuracy.
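To make the accuracy metric concrete, the sketch below scores a list of model answers against reference answers and reports a percentage. The matching rule is an assumption made for illustration: a prediction counts as correct when the normalized reference string appears inside the normalized prediction. The text does not specify the exact rule, and the helper names `normalize` and `accuracy` are hypothetical.

```python
from typing import Sequence


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def accuracy(predictions: Sequence[str], references: Sequence[str]) -> float:
    """Return the percentage of predictions judged correct.

    Assumption: a prediction is correct if it contains the reference answer
    after normalization. This rule is illustrative, not taken from the text.
    """
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        return 0.0
    correct = sum(
        normalize(ref) in normalize(pred)
        for pred, ref in zip(predictions, references)
    )
    return 100.0 * correct / len(references)


if __name__ == "__main__":
    # Hypothetical answers, not taken from the benchmark.
    preds = ["Paris", "the answer is 42", "Berlin"]
    refs = ["paris", "42", "Rome"]
    print(f"{accuracy(preds, refs):.1f}")
```

A containment rule tolerates short extra wording around the answer, which fits the concise outputs the prompt asks for. It can also accept spurious matches, such as a reference of "42" inside a prediction of "142", so a stricter exact-match rule may be preferable in some settings.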